As of August 2026, the European Union’s Artificial Intelligence (AI) Act has entered full applicability for “high-risk” systems. This landmark legislation represents the world’s first comprehensive legal framework for AI, and its implications for the financial sector – particularly for migrant integration – are profound. For years, migrants and refugees have faced the “thin file” problem: a lack of local credit history that resets their creditworthiness to zero upon arrival. Fintechs have stepped in with Alternative Credit Scoring (ACS), using “digital footprints” such as utility payments, e-commerce behavior, and mobile wallet inflows to build a more dynamic picture of financial identity. However, the AI Act now classifies these very systems as high-risk, mandating a rigorous shift from “black-box” models to “Trustworthy AI”.

The High-Risk Classification: Why Credit Scoring is Targeted

Under Annex III of the AI Act, AI systems used to evaluate the creditworthiness of natural persons or establish their credit scores are designated as high-risk. The European Commission recognizes that these systems can “appreciably impact livelihoods” and perpetuate “historical patterns of discrimination” against marginalized groups, including migrants. If an algorithm inadvertently uses proxies for ethnicity or socio-economic status, it can systematically deny essential services to newcomers.

Technical Compliance: The “Big Five” Requirements

For providers (developers) and deployers (banks/fintechs) of credit scoring AI, compliance is no longer a checklist but a continuous lifecycle process. To remain on the market in 2026, systems must meet five core technical standards:

- Risk Management Systems (Article 9): Organizations must establish a continuous iterative process to identify and mitigate reasonably foreseeable risks to fundamental rights. This includes testing the system under conditions of “reasonably foreseeable misuse”.

- Data Quality and Governance (Article 10): Training, validation, and testing datasets must be relevant, sufficiently representative, and, to the best extent possible, free of errors and complete for the intended purpose. Crucially, the Act allows providers to process special categories of personal data (such as ethnic origin) only where strictly necessary to detect and correct bias, and only subject to strict safeguards.

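- Technical Documentation and Record-Keeping (Articles 11 and 12): Providers must draw up and maintain technical documentation, and the system must automatically generate logs of its operation to ensure the traceability of decisions. Detailed “instructions for use” must also be supplied to deployers, a transparency duty that sits in the neighboring Article 13.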

- Human Oversight (Article 14): AI systems must be designed so that human agents can “intervene in or override” automated credit decisions. This is intended to counter “automation bias”, where staff defer to algorithmic suggestions without scrutiny.

- Accuracy, Robustness, and Cybersecurity (Article 15): Systems must achieve appropriate levels of accuracy, robustness, and cybersecurity, and perform consistently in those respects throughout their lifecycle.
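The record-keeping and human-oversight requirements can be pictured as a decision record that keeps the model's proposal separate from the final, possibly human-overridden, outcome. The following is a minimal sketch, not a compliance template: the `DecisionRecord` fields, the 0.5 threshold, and the `demo-0.1` version string are all hypothetical, and the Act does not prescribe this schema.

```python
import json
from dataclasses import dataclass, asdict, replace
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class DecisionRecord:
    """One traceable credit decision (hypothetical schema)."""
    applicant_ref: str            # pseudonymous reference, not raw personal data
    model_version: str
    score: float
    automated_outcome: str        # what the model proposed
    final_outcome: str            # what was actually decided
    decided_at: str               # ISO 8601 timestamp in UTC
    reviewer_id: Optional[str] = None
    override_reason: Optional[str] = None


def score_application(applicant_ref: str, score: float,
                      threshold: float = 0.5,
                      model_version: str = "demo-0.1") -> DecisionRecord:
    """Record the model's proposal; the final outcome starts out equal to it."""
    outcome = "approve" if score >= threshold else "decline"
    return DecisionRecord(applicant_ref, model_version, score, outcome, outcome,
                          datetime.now(timezone.utc).isoformat())


def apply_override(record: DecisionRecord, reviewer_id: str,
                   new_outcome: str, reason: str) -> DecisionRecord:
    """Return a new record with the human decision; the model's proposal is kept."""
    if not reason.strip():
        raise ValueError("an override must carry a documented reason")
    return replace(record, final_outcome=new_outcome,
                   reviewer_id=reviewer_id, override_reason=reason)


def to_log_line(record: DecisionRecord) -> str:
    """Serialize a record as one JSON line for an append-only log."""
    return json.dumps(asdict(record), sort_keys=True)


record = score_application("app-001", score=0.42)
reviewed = apply_override(record, "analyst-7", "approve",
                          "Verified 18 months of on-time rent payments")
print(to_log_line(reviewed))
```

Keeping `automated_outcome` immutable alongside `final_outcome` is one way to let an auditor see how often, and why, staff depart from the model.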

Mitigating Algorithmic Bias: The Technical Deep Dive

In 2026, “fairness-aware learning” has moved from academic research into production. Financial institutions are increasingly adopting adversarial debiasing. In this approach, an auxiliary adversarial network is trained to predict a protected attribute (like nationality) from the main model’s representations. The primary model is then optimized to minimize its own prediction loss while maximizing the adversary’s loss, so that its representations carry as little information about the protected attribute as possible.

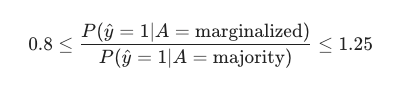
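To make the two competing objectives concrete, here is a deliberately tiny sketch in plain Python. It is not a production recipe: the scorer is a two-feature logistic regression rather than a neural network, the adversary is a one-parameter logistic model that reads the scorer's logit, and the data, the `alpha` weight, and all function names are invented for illustration.

```python
import math
import random


def sigmoid(t: float) -> float:
    # Clamp the logit so math.exp cannot overflow.
    t = max(-30.0, min(30.0, t))
    return 1.0 / (1.0 + math.exp(-t))


def make_toy_data(n: int = 400, seed: int = 0):
    """Synthetic applicants with historically biased labels (illustrative only)."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        a = rng.randint(0, 1)               # hypothetical protected attribute
        signal = rng.gauss(0.0, 1.0)        # legitimate signal, independent of a
        proxy = a + rng.gauss(0.0, 0.5)     # feature that leaks a
        # Past approvals penalized group a = 1 regardless of the signal.
        y = 1 if signal - 0.8 * a + rng.gauss(0.0, 0.5) > 0 else 0
        rows.append(([signal, proxy], y, a))
    return rows


def train(rows, alpha: float, epochs: int = 300, lr: float = 0.1):
    """Train the scorer against an adversary; alpha = 0 gives plain logistic regression."""
    w, b = [0.0, 0.0], 0.0                  # scorer parameters
    u, c = 0.0, 0.0                         # adversary parameters
    n = len(rows)
    for _ in range(epochs):
        gw, gb, gu, gc = [0.0, 0.0], 0.0, 0.0, 0.0
        for x, y, a in rows:
            z = w[0] * x[0] + w[1] * x[1] + b
            pred_err = sigmoid(z) - y           # d(prediction loss) / dz
            adv_err = sigmoid(u * z + c) - a    # d(adversary loss) / d(adversary logit)
            # The scorer descends its own loss and ascends the adversary's loss.
            dz = pred_err - alpha * adv_err * u
            gw[0] += dz * x[0]
            gw[1] += dz * x[1]
            gb += dz
            # The adversary descends its own loss.
            gu += adv_err * z
            gc += adv_err
        w = [w[0] - lr * gw[0] / n, w[1] - lr * gw[1] / n]
        b -= lr * gb / n
        u -= lr * gu / n
        c -= lr * gc / n
    return w, b


rows = make_toy_data()
print("baseline weights:", train(rows, alpha=0.0)[0])
print("adversarial weights:", train(rows, alpha=1.0)[0])
```

The quantity to watch is the second weight, which multiplies the proxy feature. The adversarial term pushes against using it, but adversarial training can oscillate, so results on real data would need to be validated with fairness metrics rather than assumed.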

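Mathematically, the goal is often to achieve Disparate Impact (DI) parity, where, in the metric's standard formulation, the ratio of favorable-outcome rates between the unprivileged and privileged groups approaches 1:

$$\mathrm{DI} = \frac{P(\hat{Y} = 1 \mid A = \text{unprivileged})}{P(\hat{Y} = 1 \mid A = \text{privileged})} \approx 1$$

Here $\hat{Y} = 1$ denotes a favorable decision (for example, credit approval) and $A$ is the protected attribute.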
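As a rough illustration, the disparate impact ratio is commonly computed as the approval rate of the unprivileged group divided by that of the privileged group. The sketch below assumes binary decisions and two group labels; the function name, the sample data, and the 0.8 cutoff (the "four-fifths" heuristic from US employment guidance, not a threshold set by the AI Act) are assumptions for the example.

```python
from typing import Hashable, Sequence


def disparate_impact(approved: Sequence[int], group: Sequence[Hashable],
                     unprivileged: Hashable, privileged: Hashable) -> float:
    """Approval rate of the unprivileged group divided by that of the privileged group."""
    if len(approved) != len(group):
        raise ValueError("approved and group must have the same length")

    def approval_rate(label: Hashable) -> float:
        decisions = [d for d, g in zip(approved, group) if g == label]
        if not decisions:
            raise ValueError(f"no applicants in group {label!r}")
        return sum(decisions) / len(decisions)

    privileged_rate = approval_rate(privileged)
    if privileged_rate == 0:
        raise ValueError("privileged approval rate is zero; ratio undefined")
    return approval_rate(unprivileged) / privileged_rate


# Four long-term residents (3 approved) and four newcomers (2 approved).
approved = [1, 0, 1, 1, 1, 0, 0, 1]
group = ["resident"] * 4 + ["newcomer"] * 4
di = disparate_impact(approved, group, unprivileged="newcomer", privileged="resident")
print(f"DI = {di:.3f}", "(below 0.8)" if di < 0.8 else "(at or above 0.8)")
```

In this toy sample the newcomer approval rate is 0.50 against 0.75 for residents, giving a ratio of about 0.667, which would warrant investigation under the four-fifths heuristic.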

To ensure these decisions are explainable to both regulators and migrants, tools like SHAP (SHapley Additive exPlanations) are used to quantify how much each feature (e.g., “timely internet bill payments”) contributed to the final score.
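The idea behind Shapley-based attribution can be shown without any library by enumerating feature coalitions directly. The sketch below computes exact Shapley values against a baseline applicant. It is not the `shap` package's API, it only scales to a handful of features, and the `toy_score` model and its coefficients are invented for the example.

```python
from itertools import combinations
from math import factorial
from typing import Callable, List, Sequence


def shapley_values(predict: Callable[[List[float]], float],
                   x: Sequence[float],
                   baseline: Sequence[float]) -> List[float]:
    """Exact Shapley attribution of predict(x) relative to predict(baseline)."""
    n = len(x)

    def value(subset: set) -> float:
        # Features in the coalition take the applicant's value; the rest stay at baseline.
        return predict([x[i] if i in subset else baseline[i] for i in range(n)])

    attributions = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for k in range(n):
            # Shapley weight for coalitions of size k that exclude feature i.
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for coalition in combinations(others, k):
                s = set(coalition)
                total += weight * (value(s | {i}) - value(s))
        attributions.append(total)
    return attributions


def toy_score(f: List[float]) -> float:
    # Hypothetical scorecard: on-time payment rate, monthly inflow (EUR), overdraft flag.
    return 300 + 200 * f[0] + 0.05 * f[1] - 50 * f[2]


applicant = [0.9, 1200.0, 1.0]
baseline = [0.5, 1000.0, 0.0]     # assumed reference applicant
names = ["on_time_rate", "monthly_inflow", "overdraft"]
for name, phi in zip(names, shapley_values(toy_score, applicant, baseline)):
    print(f"{name}: {phi:+.1f}")
```

For this linear toy model the attributions come out to roughly +80, +10, and -50 points, and they sum to the 40-point gap between the applicant's score and the baseline score. That additivity is what makes the breakdown usable in an adverse-action explanation.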

Strategic Opportunities for Fintechs

While the compliance burden is high (fines under the Act reach up to €35 million or 7% of global annual turnover for prohibited practices, and up to €15 million or 3% for breaches of the high-risk obligations), the AI Act also creates a “trust signal”. Fintechs that successfully complete conformity assessments and register in the EU high-risk AI database can show institutional investors that their alternative data models have been assessed against the Act’s non-discrimination and governance requirements. This regulatory clarity could unlock new tranches of social impact capital for migrant lending, as investors gain more assurance of the “S” (Social) integrity of their portfolios.